ru [dot] wang [at] wisc [dot] edu

I’m a final-year PhD candidate in Computer Science at the University of Wisconsin-Madison, currently working with Prof. Yuhang Zhao. My research interests include Human-Computer Interaction, Eye Tracking, Extended Reality, Accessibility, and Mental Health. I design and build intent-aware interactive systems to (1) enhance the accessibility of visual information, and (2) provide personalized in-situ mental health support.

Before Madison, I received my MS from UCSD where I worked with Prof. Nadir Weibel and Prof. Xinyu Zhang. Prior to UCSD, I received my BS from Shanghai Jiao Tong University.

Sep 2025 Started my internship at Fujitsu Research of America!

Jun 2025 3 papers accepted to ASSETS ‘25! 🎉

May 2025 Passed my preliminary exam! Officially a PhD candidate! 🎉

Oct 2024 Invited to the Walsh Award Luncheon to share my research with the Walsh family and peers at MERI!

Aug 2024 Gave a talk at Optica Vision & Color Summer Data Blast (online)!

Jun 2024 Selected to attend HCIC in Delavan, WI (2 PhD students were selected from each member university)!

May 2024 Presented GazePrompt at CHI ‘24 in Honolulu, HI 🌞🏝️!

May 2024 Passed my qual exam! 🎉

Jan 2024 GazePrompt got accepted to CHI ‘24! 🎉

Oct 2023 Presented my poster at ASSETS ‘23 in NYC 🗽!

Aug 2023 Presented my poster (spin-off from my CHI ‘23 paper) at Vision 2023 in Denver, CO ⛰️!

Apr 2023 Presented my paper at CHI ‘23 in Hamburg, Germany 🍺🌭!

Understanding User Intent across Visual Abilities and Tasks via Eye Tracking

Using eye tracking, we compare the gaze behavior of sighted and low vision people during reading, and uncover low vision users’ gaze-level reading challenges under different magnification modes. We further identify the subtasks in image viewing activities and characterize gaze patterns of users with varying visual abilities during these tasks, enabling user intent recognition across visual abilities.

Designing In-Situ AR Intervention to Support OCD Self-Management

We design AI-driven AR interventions that incorporate core strategies of effective OCD therapies (e.g., ERP and ACT). By rendering restrictive visual augmentations on real-world objects involved in compulsive behaviors (e.g., hand washing), our system encourages users to disengage from compulsions and helps them start an in-situ exposure practice.

Gaze‐Aware Visual Augmentations to Enhance Low Vision People's Reading Experience

We improve the gaze data collection process to make eye tracking accessible to low vision users, and use eye tracking to identify low vision people’s unique gaze patterns during reading. Based on our findings, we design gaze-aware visual augmentations that enhance low vision users’ reading experience. Our system facilitates line switching, line following, and difficult word recognition.

Understanding Cooking Challenges for People with Visual Impairment

We conduct a contextual inquiry study to observe how people with visual impairments (PVI) cook in their own kitchens. We also interview rehabilitation professionals about kitchen-related training. Combining the two studies, we seek to understand PVI’s unique challenges and needs in the kitchen and identify design considerations for future assistive technology.

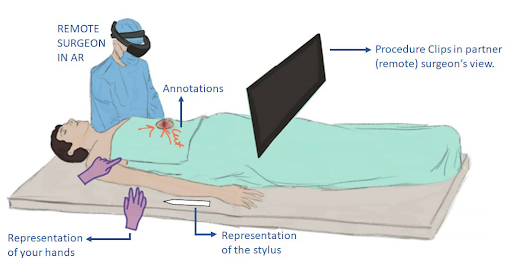

ARTEMIS (Augmented Reality Technology-Enabled reMote Integrated Surgery)

ARTEMIS is a collaborative system for surgical telementoring. The surgical field is recreated for a remote expert in VR, and the remote expert’s annotations and avatar are displayed in the novice’s field of view in real time using AR. ARTEMIS supports remote surgical mentoring of novices through synchronous point, draw, and look affordances and asynchronous video clips.

Characterizing Visual Intents for People with Low Vision through Eye Tracking

Ru Wang, Ruijia Chen, Anqiao Erica Cai, Zhiyuan Li, Sanbrita Mondal, Yuhang Zhao

To appear at ASSETS ‘25

“It was Mentally Painful to Try and Stop”: Design Opportunities for Just-in-Time Interventions for People with Obsessive-Compulsive Disorder in the Real World

Ru Wang, Kexin Zhang, Yuqing Wang, Keri Brown, Yuhang Zhao

To appear at ASSETS ‘25

Characterizing Collective Efforts in Content Sharing and Quality Control for ADHD-relevant Content on Video-sharing Platforms

Hanxiu ‘Hazel’ Zhu, Avanthika Senthil Kumar, Sihang Zhao, Ru Wang, Xin Tong, Yuhang Zhao

To appear at ASSETS ‘25

PeerEdu: Bootstrapping Online Learning Behaviors via Asynchronous Area of Interest Sharing from Peer Gaze

Songlin Xu, Dongyin Hu, Ru Wang, Xinyu Zhang

Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI ‘25)

GazePrompt: Enhancing Low Vision People’s Reading Experience with Gaze-Aware Augmentations

Ru Wang, Zach Potter, Yun Ho, Daniel Killough, Linda Zeng, Sanbrita Mondal, Yuhang Zhao

Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI ‘24)

Practices and Barriers of Cooking Training for Blind and Low Vision People (Poster)

Ru Wang, Nihan Zhou, Tam Nguyen, Sanbrita Mondal, Bilge Mutlu, Yuhang Zhao

The 25th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ‘23)

Understanding How Low Vision People Read using Eye Tracking

Ru Wang, Linda Zeng, Xinyong Zhang, Sanbrita Mondal, Yuhang Zhao

Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ‘23)

Characterizing Barriers and Technology Needs in the Kitchen for Blind and Low Vision People

Ru Wang, Nihan Zhou, Tam Nguyen, Sanbrita Mondal, Bilge Mutlu, Yuhang Zhao

arXiv 2023

“I Expected to Like VR Better”: Evaluating Video Conferencing and Desktop Virtual Platforms for Remote Classroom Interactions

Matin Yarmand, Ru Wang, Haowei Li, Nadir Weibel

ISLS 2024

ARTEMIS: A Collaborative Mixed-Reality Environment for Immersive Surgical Telementoring

Danilo Gasques, Janet Johnson, Tommy Sharkey, Yuanyuan Feng, Ru Wang, Zhuoqun Robin Xu, Enrique Zavala, Yifei Zhang, Wanze Xie, Xinming Zhang, Konrad Davis, Michael Yip, Nadir Weibel

Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ‘21)

Approximate Random Dropout for DNN training acceleration in GPGPU

Zhuoran Song, Ru Wang, Dongyu Ru, Zhenghao Peng, Hongru Huang, Hai Zhao, Xiaoyao Liang, Li Jiang

2019 Design, Automation & Test in Europe Conference & Exhibition (DATE 2019)

Winner of the CHI 2021 Student Volunteer (SV) happi design contest!